- Home

- Azure Data

- Modernization Best Practices and Reusable Assets Blog

- How to handle Large Objects (LOBs) migration from Db2, Oracle to Azure SQL & Datalake - Part 1

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Introduction

Database modernization from Db2 and Oracle tables with LOB datatypes to Azure SQL PaaS offering (DB / MI) or SQL Server needs careful planning and at the same time provides an opportunity to optimize your database. Having TBs or PBs of LOB data stored as a part of any database has space implications as well as other maintenance overheads.

In this technical blog, we are discussing the approach of storing the LOB data as Azure Datalake storage blob object and location of the blob object in Azure SQL DB Table. This approach allows reduced time to perform maintenance, provides additional flexibilities on backup / DR / storage tier and significantly reduces the size of the SQL DB. This blog references Db2 z/OS as source but the same approach can be extended to other Db2 flavors or other databases like Oracle.

This blog outlines how to copy BLOB data from Db2 z/OS database (same concept applies for Db2 LUW, Db2 i, Oracle, etc.) to Azure using Azure Data Factory and LogicApp. The approach outlined here is low-code/no-code solution with ease-of-maintenance.

To identify the compatible SQL target database, you can use conduct an assessment using SSMA for Db2. Once the SQL database is determined, SSMA can also be used to convert schema and other database objects. A step-by-step guidance is here.

Azure Data Factory is a managed cloud service that's built for these complex hybrid extract-transform-load (ETL), extract-load-transform (ELT), and data integration projects. It has Db2 connector and allows bulk copy.

Azure Logic Apps is integration PaaS for automating workflows.

In our solution, we have considered ADF Copy activity with db2connector, Db2 as source and Azure SQL as Sink. ADF performs bulk copy so getting data to Azure SQL will be much quicker, once data is present in Azure SQL, serverless solution LogicApp performs blob object creation and metadata population.

General Overview

This solution helps in transferring LOB data residing in Db2 table to ADLS Gen2 storage account and update blob metadata information to Azure SQL DB. This approach allows to store LOB data in Azure storage account and reference the same from Azure SQL database via blob object metadata. For data tier modernization projects Db2 will usually be present in on-premises environment. ADF provides an ability for bulk copies and an ability to parallelize the copy to Azure.

For easiness we have divided this into 2 stages.

1st stage is to copy Db2 table data to Azure SQL using ADF.

2nd stage is to copy data from Azure SQL to Azure Datalake Storage and metadata in Azure SQL.

Stage1 - Migrate Data to Azure SQL

Setup Azure Data Factory Self Hosted Integration Runtime (SHIR) on machine which has access to Db2 / On-Prem database.

Create Azure SQL Database.

Create Linked Service for Db2.

Create Link Service for Azure SQL DB.

Create ADF Pipeline with Copy activity which has Db2 as source and Azure SQL DB as sink.

Stage 2 - Optimize LOB Data Storage

Create a LogicApp workflow to read Azure SQL DB and create a blob in Azure Storage Account.

Update blob object metadata information to Azure SQL DB.

Below diagram describes high level architecture of the approach.

High level architecture to Copy Data from Db2 to Azure

Components present in above diagram:

- For testing purposes, Db2 on-prem database is installed on z/OS.

- ADF Self Hosted Integration Runtime installed on VM which has connectivity to Db2 z/OS.

- ADF Pipeline can be triggered based on external event or scheduled on definite frequency. Created a lookup table so multiple threads of the pipeline can be executed. ADF copy activity will have Db2 connector as source to get data from Db2 and Azure SQL DB as sink to write data received.

- Azure SQL DB will store data copied from Db2.

- As stage 2, when ADF copy is complete invoke LogicApp workflow.

- Create LogicApp workflow which fetches data from Azure SQL and creates Azure Storage blob (one record to one blob in this use case) in Azure Storage account.

- Azure SQL DB is updated with Azure storage Blob metadata so application can refer to the same.

Configurations

Create linked service to Db2

Azure data factory Db2 connector can be used to create linked service to Db2 LUW with details Db2 database hostname, port number, Mainframe RACF user id and password. Detailed instructions for the same can be found here.

Create Linked Service to Azure SQL DB

Azure data factory Azure SQL DB connector can be used to create linked service to SQL DB. Instructions for the same can be found here.

Source Table Information Db2 :

CREATE TABLE "dba1"."BLOB_TABLE " (

"ID" INTEGER WITH DEFAULT NULL,

"PK_DB2" INTEGER NOT NULL,

"IMAGEBLOB" BLOB(50000) WITH DEFAULT NULL,

CONSTRAINT "BLOBTABLE_PK" PRIMARY KEY

("PK_DB2")

)

Target Table Information on Azure SQL :

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE TABLE [dbo].[BLOB_TABLE ](

[IDENTITY_ID] [int] IDENTITY(1,1) NOT NULL,

[PART_ID] [int] NOT NULL,

[DB2_PK] [int] NOT NULL,

[IMG_BLOB_CONTENT] [varbinary](max) NULL

) ON [PRIMARY]

GO

Metadata Table definition on Azure SQL

Alternatively, we can also use same source table schema with 2 additional columns to hold Path and Image Blob Id.

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE TABLE [dbo].[BLOB_Metadata](

[IDENTITY_COL] [int] IDENTITY(1,1) NOT NULL,

[DB2_PARTID] [int] NULL,

[DB2_PK] [int] NULL,

[PATH] [varchar](200) NULL,

[IMAGE_blob_ID] [varchar](200) NULL

) ON [PRIMARY]

GO

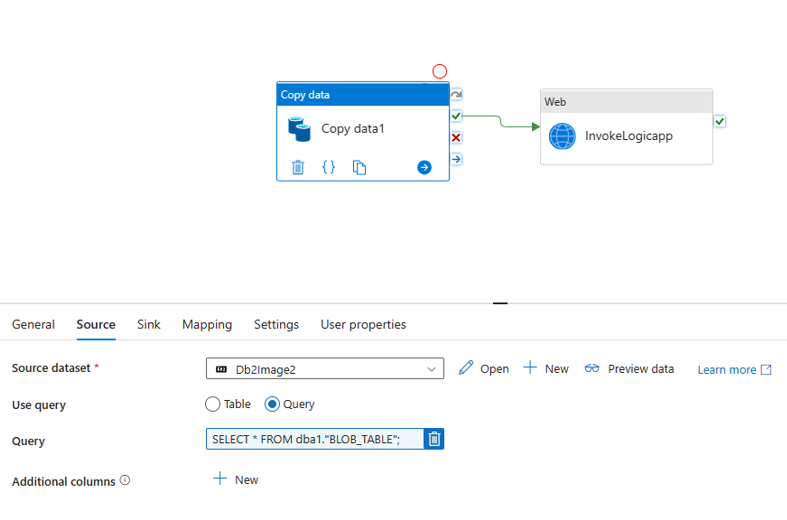

Copy data from Db2 to Azure SQL DB using single Copy Activity

In ADF pipeline, included Source as Db2 and use the linked service created in previous steps.

If source data is huge then we might want to use Lookup table approach and invoke parallel copies.

In this case, we have considered to copy entire Db2 to Table to Azure SQL in a single copy activity.

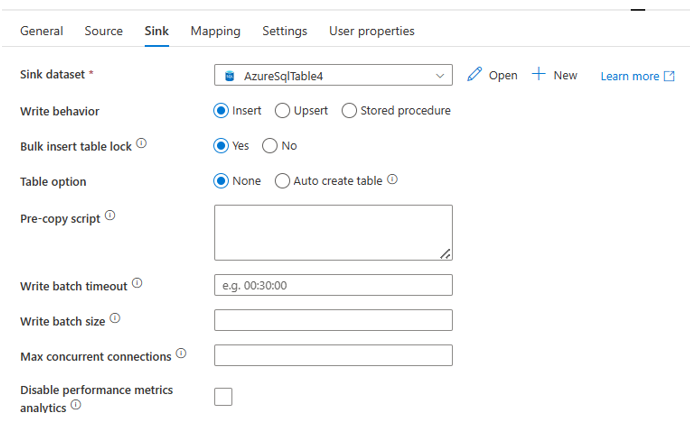

Sample set up on Sink (Azure SQL)

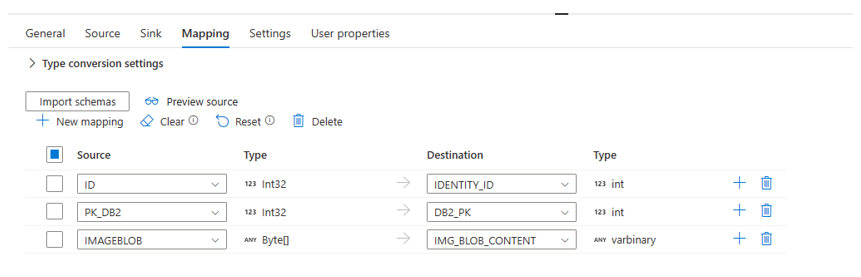

Mapping of Db2 columns to Azure SQL table columns.

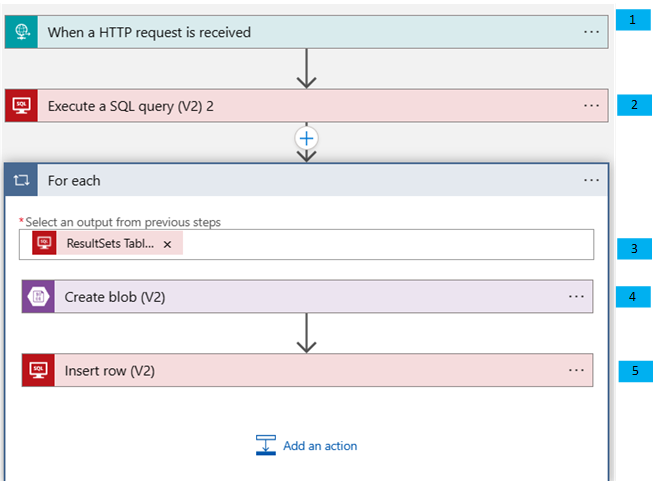

LogicApp workflow setup

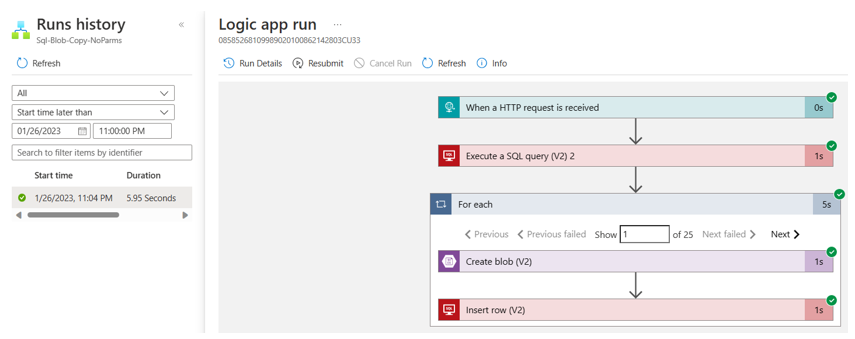

Below is the LogicApp setup created for testing.

Components present in above diagram:

- Invoking LogicApp from ADF using HTTP request / API method. In current example this will help us to integrate ADF pipeline so invoking it after copy activity is complete.

- Execute SQL Query activity is used to fetch data from BLOB_TABLE in this case. Here alternatively we can use “Get Rows” activity as well.

- For Each activity, reads Exec SQL query activity output as an array and performs the steps mentioned in block of For Each.

- Create Blob activity creates a blob object in Azure storage account mentioned. Here we read the Exec SQL query outputs.

- Insert row activity creates a new row in BLOB_Metadata to populate blob object metadata.

Step1: HTTP Request is received - trigger

LogicApp has multiple triggers like Recurrence trigger (at certain frequency), File watcher and HTTP request end point trigger. In this case we have utilized HTTP Request end point trigger as we need to integrate it with ADF.

On creation of “When a HTTP request is received” activity, a HTTP POST URL is generated. Copy this HOST POST URL and we will update this in ADF Invoke Logicapp activity at later end of this document.

In our case, we are not passing any parameters so JSON schema is very simple.

Sample JSON Schema:

If we want to pass any additional payload in HTTP request the same needs to be added accordingly.

{

"properties": {

"body": {

"properties": {},

"type": "object"

},

"headers": {

"properties": {

"Content-Type": {

"type": "string"

}

},

"type": "object"

},

"method": {

"type": "string"

},

"url": {

"type": "string"

}

},

"type": "object"

}

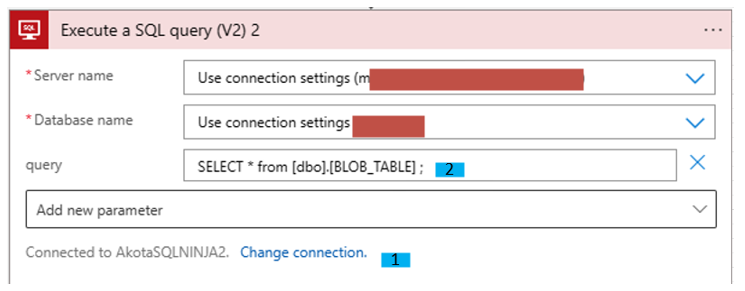

Step2: Execute a SQL Query

In this activity, we are reading the content of BLOB_TABLE from Azure SQL DB. Alternatively we can use Get Rows activity also.

- We need to create connection parameter by clicking “Change Connection”.

- Provide the SQL query to fetch the data which we are intending to create as b object in Azure storage account.

In our case as entire BLOB_TABLE is in scope, we have given ‘select * from dbo.BLOB_TABLE;’

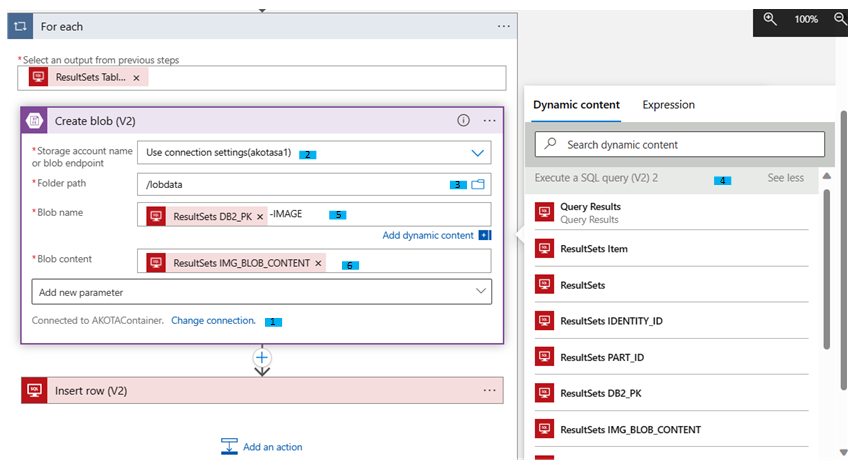

Step3: For Each Control

Once we fetch data from SQL table for every row (LOB data type in the row) we need to create an Azure storage blob object, so we need to loop it through for all the records that are fetched.

Under “Select an output from previous steps”,

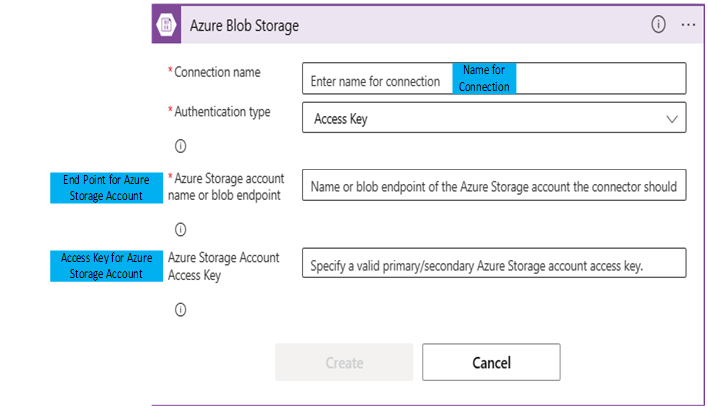

Step4: Create Blob

In For Each, add an action named “Create Blob”.

Here we need to perform below steps.

- In “Change Connection”, Provide the connection details for Storage account as referred below.

- In dropdown select the storage account name.

- Click on folder symbol on right hand side an select the Azure storage Container in which you want to create the Azure blob objects.

- Blob Name – It’s the name with which blob object will be created. In our sample, I want to create Blob object with name of Db2 Primary Key. In order to fetch Db2 Primary key for the array item, click on “Add Dynamic Content” and then in popup select the column from SQL table we want to use here. In this case, DB2_PK is the column name.

- For Blob Name- select the element from “Add Dynamic Content” and I have suffixed it with -IMAGE. So for example, if 1234 is DB2_PK for current array item then blob object will be created as 1234-IMAGE.

- Blob Content – It’s the content for Blob object. In our case, its IMG_BLOB_CONTENT column of BLOB_TABLE table.

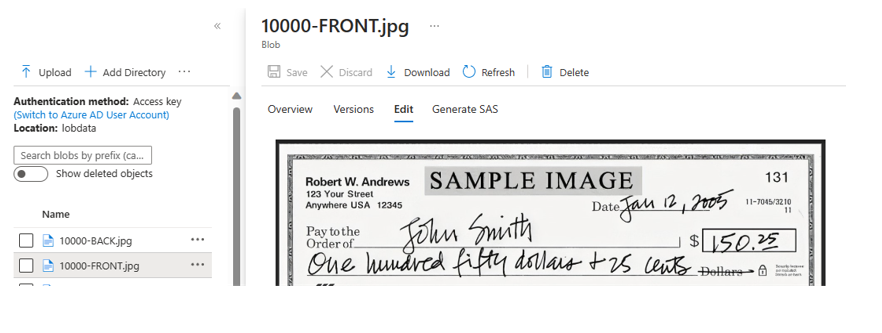

Once above steps are executed, we should be seeing a blob object in Azure storage account.

Step5: Insert into SQL Table for Metadata

In this step we are creating a blob object metadata table so application can refer to the metadata information and fetch the image from Azure Storage.

With in For Each block, select SQL server activity with “Insert row’ action. Once its Add an activity called “Insert row”.

- Click on Change Connection and provide details of Azure SQL Db.

- Once a new connection is created, in the dropdown select Server name, Database Name and the Metadata Table name. In this example, its BLOB_Metadata. Once we select Table Name, click on “Add new Parameter”. This provides a list of columns present in BLOB_Metadata table.

- For each of the column, select appropriate content and in this case it’s from “Add Dynamic content”. For DB2_PARTID column we want to insert PART ID from Azure SQL table. So selected “ResultSets PART_ID” column from Add Dynamic content.

- Map Db2_Pk with Dynamic content of “ResultSets DB2_PK” column from BLOB_TABLE table.

- PATH is the Azure storage account path for respective blob object. In Add dynamic content, under “Create blob” section, pick PATH variable.

- IMAGE_blob_id is for storing the unique id of the blob object in Azure storage account. So in Add Dynamic Content under “Create Blob” activity, pick “Id” variable.

These mapping are provided for reference, based on application need we should be able to add more variables to metadata table and populate appropriate information.

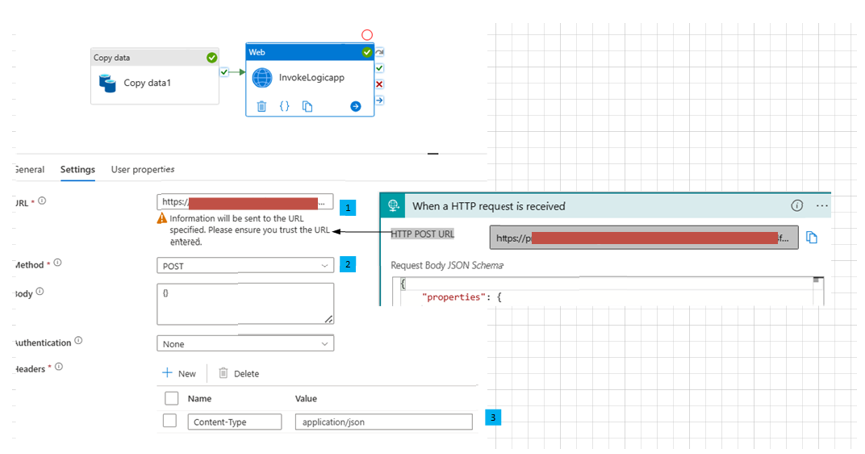

ADF and LogicApp Integration

With this current setup, we should be able to manually execute ADF Copy activity and LogicApp workflow. In this section, want to provide more information on how to integrate ADF with LogicApp in HTTP / API method. Detailed documentation on how to invoke Logicapp via HTTP is available in Microsoft Docs here.

From LogicApp workflow, copy HTTP POST URL of “When a HTTP request is received” activity.

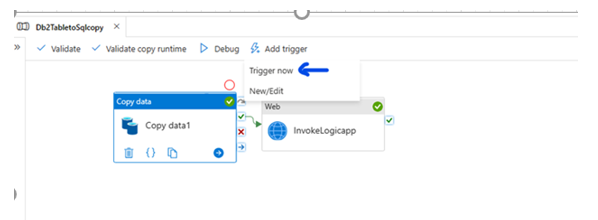

In ADF, attach a web activity to existing Copy Activity and follow below steps.

- Shows the HTTP POST URL and providing the same in ADF URL (under Web Activity / Settings).

- For Method, select POST. As there is No payload in this sample, I have left Body as blank ({}). Same is for Authentication also.

- In header, we are saying that our Content / Payload is Json format. Additional if we need to pass any additional header variables same can be included. If we add any new Header variables, make sure to change the json payload structure on Logicapp also accordingly.

With this if we save and debug / trigger, we should be able to invoke Logicapp automatically after ADF Copy activity is complete.

Sample Results

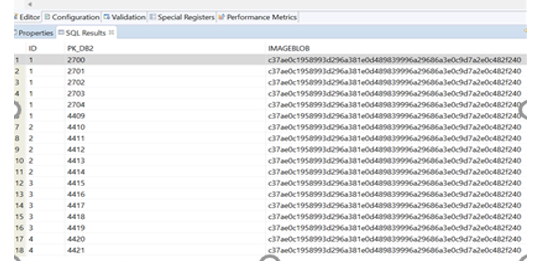

Input Db2 table has 25 rows as shown below. For testing purposes, included same BLOB content across all the 25 rows.

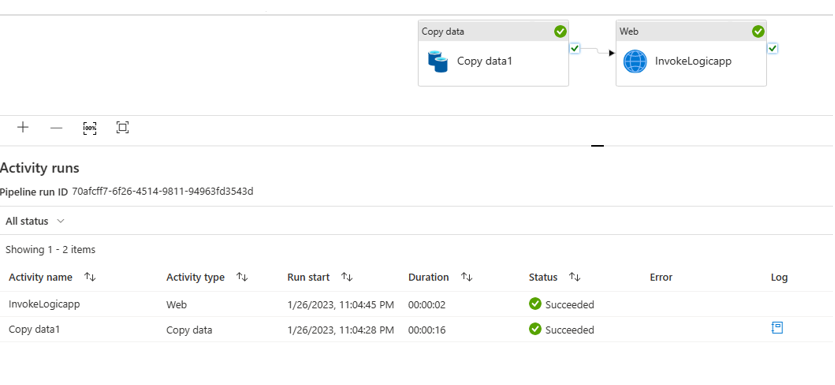

Now Execute ADF pipeline. For testing, here we are using “Trigger Now” in ADF. Based on business requirement, Trigger can be Schedule based, Storage Event based or a Custom Event.

Once ADF pipeline is executed, we should be able to see the status in “Monitor” section.

Now look at BLOB_TABLE table on Azure. As a next step let us validate Logic App.

LOB data in Azure Data Lake Containers

Important points for Consideration

- When we store Db2 LOB data as an blob object, application will require change on referring to blob object in storage account. This article doesn’t cover them.

- The above example is shown to provide information about important steps on how to copy LOB data to Azure. This example is not exhaustive. Actual implementation based on volume, we may include ADF Copy in multiple threads and similarly at LogicApp.

- Monitoring, Logging and Error handling would need to be considered.

- If source Db2 table is partitioned based on size and if partition id is part of table, then the same can be used to invoke parallel threads. Other options to parallelize include date ranges etc.

These decisions need to be taken by considering the source data structure, partitioning and SHIR capacity.

- ADF parallel Copy activity has default limit of 20 and max is 50.

- ADF Copy activities can be generated dynamically to copy over data from multiple Db2 tables here and also splitting copy activity of very large tables into multiple copy activities here.

- Refer to our Technical blog in for generating ADF Copy activities dynamically.

- Example above used Azure SQL DB as target, but your target database on Azure can be different

- LogicApp For_Each has a limit of 100,000, For Logicapp limits refer to this link.

- Once LOB data is moved to Azure storage account and reconciled then table dbo.BLOB_TABLE (in this case) can be dropped.

- The above technical blog provides high-level guidance with no warranties with respect to the information contained herein.

Feedback and suggestions

If you have feedback or suggestions for improving this data migration asset, please contact the Databases SQL Ninja Engineering Team (datasqlninja@microsoft.com). Thanks for your support!

Note: For additional information about migrating various source databases to Azure, see the Azure Database Migration Guide.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.